KaaS Support

Rackspace Kubernetes-as-a-Service

Last updated: Feb 11, 2022

Release: v6.0.2

This section is a quick reference for Rackspace support engineers who have questions about Rackspace Kubernetes-as-a-Service (KaaS). The section includes the information about monitoring, troubleshooting, and upgrading a Rackspace KaaS cluster.

- Preface

- Getting started with Kubernetes

- Common customer support operations

- Network configuration

- Add resource limits to applications

- Troubleshooting

- Kubernetes tips, tricks, and one-liners

- Rackspace Kubernetes-as-a-Service architecture

- Upgrade Rackspace KaaS

- Recover from a stack update

- Use a private Docker image registry

- SSL certificates rotation

- Backups and disaster recovery

- Document history and additional information

- Disclaimer

Getting started with Kubernetes

KaaS is based on upstream Kubernetes, and many upstream concepts and operations apply to Kubernetes clusters deployed by KaaS. If you are new to Kubernetes, use these resources to get started:

- Kubernetes basics

- Deploy an application

- Explore your application

- Expose your application publicly

- Scale your application

- Update your application

Common customer support operations

This section includes information about customer support operations for Rackspace Kubernetes-as-a-Service (KaaS).

- Create a monitoring suppresion

- Resize a Kubernetes cluster

- Replace a Kubernetes node

- Replace an etcd node

- Replace a load balancer

- Update the rpc-environments repository

Create a monitoring suppression

Before performing maintenance, create a new suppression for the monitored environments:

- Log in to the Rackspace Business Automation (RBA) portal.

- Go to Event Management -> Suppression Manager 3.0 -> Schedule New Suppression.

- Fill in each of the tabs. Use the correct account, ticket, and device numbers.

- Confirm that the suppression is added to the maintenance ticket.

Resize a Kubernetes cluster

You can resize a Kubernetes cluster by adding or removing Kubernetes worker nodes, etcd nodes, or other nodes.

The kaasctl cluster scale command is under development. For emergency resize operations, use the procedure described in K8S-2052.

Replace a Kubernetes node

If one of the nodes in the Kubernetes cluster fails, you can replace it by using the kaasctl cluster replace-node command.

To repair a failed Kubernetes node, run the following commands:

- If you have not yet done so, start an interactive Docker session as described in Start an interactive Docker session.

- View the list of nodes in your cluster:

kaasctl cluster list-nodes <cluster-name>

Example:

kaasctl cluster list-nodes kubernetes-test

NodeID ProviderID Name Type

openstack_compute_instance_v2.etcd.0 2371c70b-d8e7-44e9-ab9b-6ff3b5d8cc7c kubernetes-test-etcd-1 etcd

openstack_compute_instance_v2.etcd.1 ed664934-0ae4-4532-83a9-305a2f9e1e7c kubernetes-test-etcd-2 etcd

openstack_compute_instance_v2.etcd.2 431aa4cc-10d2-46d2-be36-85ebaeb619e3 kubernetes-test-etcd-3 etcd

openstack_compute_instance_v2.k8s_master_no_etcd.0 11b5c173-d5be-4bf4-9a2a-1bebbd9a70f8 kubernetes-test-k8s-master-ne-1 master

openstack_compute_instance_v2.k8s_master_no_etcd.1 6fcaca4a-502d-40b9-bd7f-1e47a98458cb kubernetes-test-k8s-master-ne-2 master

openstack_compute_instance_v2.k8s_master_no_etcd.2 73c4952d-b02a-4493-a188-592f6f56dd2b kubernetes-test-k8s-master-ne-3 master

openstack_compute_instance_v2.k8s_node_no_floating_ip.0 ae106171-4ee1-4e2b-9478-bcdb242b8f01 kubernetes-test-k8s-node-nf-1 worker

openstack_compute_instance_v2.k8s_node_no_floating_ip.1 c9a74608-27f3-4370-a2b6-5531a0b2a288 kubernetes-test-k8s-node-nf-2 worker

openstack_compute_instance_v2.k8s_node_no_floating_ip.2 8473185a-43bf-472e-bc8a-bb93611b889f kubernetes-test-k8s-node-nf-3 worker

openstack_compute_instance_v2.k8s_node_no_floating_ip.3 b0e7ed19-42ee-45ba-b371-1a70bffc4ed5 kubernetes-test-k8s-node-nf-4 worker

- Replace a failed node by using the NodeID from the output of the

kaasctl cluster list-nodescommand:

kaasctl cluster replace-node <cluster-name> <NodeID>

Example:

kaasctl cluster replace-node kubernetes-test openstack_compute_instance_v2.k8s_node_no_floating_ip.0

The resource openstack_compute_instance_v2.k8s_node_no_floating_ip.0 in the module root.compute has been marked as tainted!

Initializing modules...

- module.network

- module.ips

- module.compute

- module.loadbalancer

- module.dns

Initializing provider plugins...

- When prompted, type

yes.

Enter a value:Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value:

This operation might take up to 15 minutes.

Example of system response:

...

Gathering Facts ----------------------------------------------------- 3.33s

fetch admin kubeconfig from Kube master ----------------------------- 2.18s

fetch Kube CA key from Kube master ---------------------------------- 2.09s

fetch Etcd CA cert from Kube master --------------------------------- 2.07s

fetch Etcd client cert from Kube master ----------------------------- 2.05s

fetch Kube CA cert from Kube master ----------------------------------2.05s

fetch Etcd client key from Kube master -------------------------------1.95s

write updated kubeconfig to file -------------------------------------0.82s

download : Sync container --------------------------------------------0.80s

download : Download items --------------------------------------------0.77s

use apiserver lb domain name for server entries in kubeconfig --------0.46s

read in fetched kubeconfig -------------------------------------------0.25s

kubespray-defaults : Configure defaults ------------------------------0.16s

download : include_tasks ---------------------------------------------0.10s

- Exit the interactive Docker session:

exit

- Verify the replaced node operation:

kubectl get nodes -o wide

kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

kubernetes-test-k8s-master-ne-1 Ready master 21h v1.11.5 146.20.68.57 Container Linux by CoreOS 1855.4.0 (Rhyolite) 4.14.67-coreos docker://18.6.1

kubernetes-test-k8s-master-ne-2 Ready master 21h v1.11.5 146.20.68.54 Container Linux by CoreOS 1855.4.0 (Rhyolite) 4.14.67-coreos docker://18.6.1

kubernetes-test-k8s-master-ne-3 Ready master 21h v1.11.5 146.20.68.76 Container Linux by CoreOS 1855.4.0 (Rhyolite) 4.14.67-coreos docker://18.6.1

kubernetes-test-k8s-node-nf-1 Ready node 21h v1.11.5 <none> Container Linux by CoreOS 1855.4.0 (Rhyolite) 4.14.67-coreos docker://18.6.1

kubernetes-test-k8s-node-nf-2 Ready node 21h v1.11.5 <none> Container Linux by CoreOS 1855.4.0 (Rhyolite) 4.14.67-coreos docker://18.6.1

kubernetes-test-k8s-node-nf-3 Ready node 21h v1.11.5 <none> Container Linux by CoreOS 1855.4.0 (Rhyolite) 4.14.67-coreos docker://18.6.1

kubernetes-test-k8s-node-nf-4 Ready node 21h v1.11.5 <none> Container Linux by CoreOS 1855.4.0 (Rhyolite) 4.14.67-coreos docker://18.6.1

NOTE: If you want to replace more than one node, you need to run this command for each node.

Replace a master node

Master node replacement is currently not implemented.

Replace an etcd node

If one of the nodes in the etcd cluster fails, you can mark it as unhealthy by using the Terraform taint command, replace the node, and then rerun the deployment of the unhealthy components. First, identify the node that needs to be replaced and then run the taint command so that Terraform applies the necessary changes to the cluster.

To repair an unhealthy etcd node, run the following commands:

-

If you have not yet done so, start an interactive Docker session as described in Start an interactive Docker session.

-

Change the current directory to the directory with the

terraform.tfstatefile. Typically, it is located in/<provider-dir>/clusters/<cluster-name>. -

Run the

terraform taintcommand:

terraform taint -module='compute' '<etcd-node-name>'

Example for an OpenStack environment:

terraform taint -module='compute' 'openstack_compute_instance_v2.etcd.4'

- Redeploy the infrastructure:

kaasctl cluster create <cluster-name> --infra-only

- Verify the nodes that

kaasctlreplaces in the output and, when prompted, typeyes. - Remove the failed etcd member from the cluster:

i. Connect to the etcd master node by using SSH. Use the id_rsa_core key stored in <provider-dir>/clusters/<cluster-name>.

ii. Change the directory to /etc/kubernetes/ssl/etcd/.

iii. Get the list of endpoints:

ps -ef | grep etcd

iv. Get the status of the endpoints:

ETCDCTL_API=3 etcdctl --endpoints <list-of-endpoints> --cacert="ca.pem" --cert="<cert-name>.pem" --key="<key>.pem" endpoint health

Example:

ETCDCTL_API=3 etcdctl --endpoints https://10.0.0.6:2379,https://10.0.0.13:2379,https://10.0.0.14:2379,https://10.0.0.9:2379,https://10.0.0.7:2379 --cacert="ca.pem" --cert="node-kubernetes-test-k8s-master-ne-1.pem" --key="node-kubernetes-test-k8s-master-ne-1-key.pem" endpoint health

v. Get the list of cluster members:

ETCDCTL_API=3 etcdctl --endpoints <list-of-endpoints> --cacert="ca.pem" --cert="<cert-name>.pem" --key="<key>.pem" member list

Example:

ETCDCTL_API=3 etcdctl --endpoints https://10.0.0.6:2379,https://10.0.0.13:2379,https://10.0.0.14:2379,https://10.0.0.9:2379,https://10.0.0.7:2379 --cacert="ca.pem" --cert="node-kubernetes-test-k8s-master-ne-1.pem" --key="node-kubernetes-test-k8s-master-ne-1-key.pem" member list

Correlate the IP of the unhealthy endpoint above with the correct member.

vi. Remove the unhealthy etc member by using the hash for the correct member:

ETCDCTL_API=3 etcdctl --endpoints <list-of-endpoints> --cacert="ca.pem" --cert="<cert-name>.pem" --key="<key>.pem" member remove <hash>

Example:

ETCDCTL_API=3 etcdctl --endpoints https://10.0.0.6:2379,https://10.0.0.13:2379,https://10.0.0.14:2379,https://10.0.0.9:2379,https://10.0.0.7:2379 --cacert="ca.pem" --cert="node-kubernetes-test-k8s-master-ne-1.pem" --key="node-kubernetes-test-k8s-master-ne-1-key.pem" member remove 503e0d1f76136e08

- Terminate the connection to the master node.

- Start the

kaasctlDocker interactive session. - Recreate the cluster by running:

kaasctl cluster create <cluster-name> --skip-infra

- Log in to the master node.

- Verify the etcd node status by running the

etcdctl endpoint healthandetcdctl member listcommands.

NOTE: If you want to replace more than one etcd node, you must perform this full procedure for each node.

Replace a load balancer in OpenStack

By default, Rackspace KaaS deploys the following load balancers:

- The Kubernetes API (deployed by using Terraform - all others are deployed by using Kubernetes)

- Ingress Controller

- The Docker registry

All load balancers that are deployed outside of the rackspace-system namespace are managed by the customer.

If a load balancer fails or is in an unrecoverable state, use the openstack loadbalancer failover <lb-id> to replace it. This command works for OpenStack Queens or later.

To replace a load balancer, complete the following steps:

- Replace a load balancer:

openstack loadbalancer failover <lb-id>

- Optionally, verify the Kubernetes operation by using

kubectl:

kubectl get nodes

The command returns a list of Kubernetes nodes.

Update the rpc-environments repository

Every time you make a change to the Terraform environment, such as replacing a node or a load balancer, you must update the rpc-environments repository with the new Terraform *.tfstate file.

To update the *.tfstate file in the rpc-environments repository:

- Copy the

<provider-dir>/clusters/<cluster-name>/terraform-<cluster>.tfstateto/tmp. - Find the customer’s vault encryption password in the PasswordSafe project.

- Encrypt the

*.tfstatefile copy in/tmpwithansible-vault:

root@infra01:/tmp$ ansible-vault encrypt /tmp/terraform-my-cluster.tfstate

New Vault password:

Confirm New Vault password:

Encryption successful

- Create a PR in the customer’s project in the

https://github.com/rpc-environments/<customer-id>repository with the updated file.

Network configuration

This section describes some of the KaaS networking concepts, such as network policies, traffic flow, and so on.

- Configure network policies

- Network traffic flow in a worker node

- Routing table

- Analyze IP packets using tcpdump

Configure network policies

A network policy is a specification of how groups of pods are allowed to communicate with each other and other network endpoints. Network policies are supported in Kubernetes with certain Container Network Interface (CNI) providers, such as Calico and Canal.

By default, Kubernetes clusters are shipped with the Calico CNI provider, which implements network policies.

Calico pods must be running when you execute the following kubectl command:

kubectl -n kube-system get pods | grep calico

kube-calico-gvqpd 2/2 Running 0 1h

kube-calico-hkwph 2/2 Running 0 1h

kube-calico-hp8hv 2/2 Running 0 1h

kube-calico-jlqxg 2/2 Running 0 1h

kube-calico-p0kl9 2/2 Running 0 1h

kube-calico-pkf1f 2/2 Running 0 1h

Verify a network policy

By default, Calico allows all ingress and egress traffic to go in and out of Pods. To see network policies in action, follow the instructions in this demo guide. In addition, see Configure network policies.

Network traffic flow in a worker node

During the deployment, the Kubernetes Installer creates many network ports on the Kubernetes worker node. The following table describes these ports and their function:

Worker node networking ports

| Network interface | Description |

|---|---|

calicNNN | Ports created by Calico for Kubernetes pods. Every time the Kubernetes Installer or you create a pod, Calico creates a calicNNN network interface and connects it to the container by using the Container Network Interface (CNI) plugin. |

docker0 | An interface that is created by default during the cluster deployment, but is not used for any traffic. |

kube-ipvs0 | An IP Virtual Server (IPVS) interface that kube-proxy uses to distribute traffic through the Linux Virtual Server (LVS) kernel module. |

eth0 | An Ethernet interface that the worker node uses to connect to other nodes in the Kubernetes cluster, OpenStack network, and the external network. |

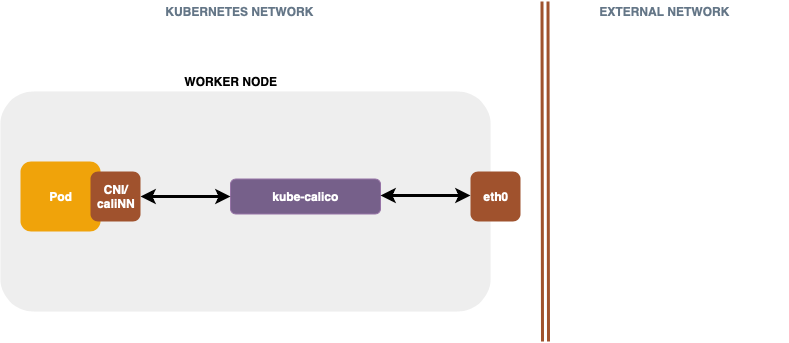

KaaS deploys kube-calico pods that are responsible for network requests management on each worker node. kube-calico is responsible for setting up a Border Gateway Protocol (BGP) mesh between each node while also being responsible for applying related network policies.

The following diagram describes traffic flow from the pod’s network interface to the eth0:

kube-calico configures routing and other important networking settings. In the diagram above, network traffic goes from the pod’s caliNNN interface to kube-calico, which applies network policies, such as blocking certain network ports and so on. From kube-calico, the traffic goes straight to eth0 and is routed as the next hop to Calico on the destination host.

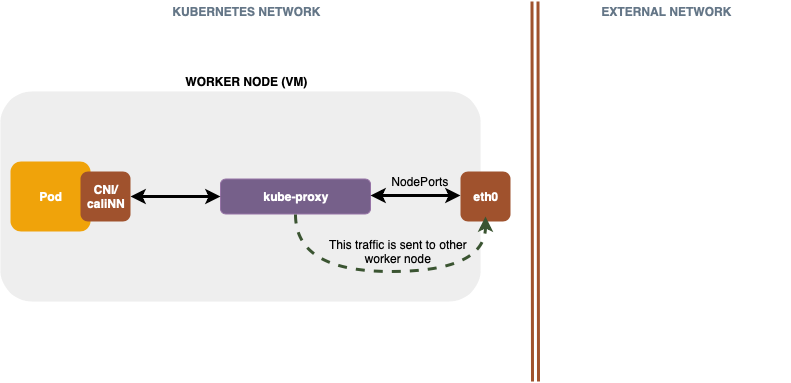

Another way of processing traffic inside a worker is by using kube-proxy. kuber-proxy acts as an internal load balancer and processes network traffic for Kubernetes services with Type=NodePorts, such as MySQL, Reddis, and so on.

The following diagram describes traffic flow from the eth0 to kube-proxy.

In the diagram above, kube-proxy receives traffic and sends it either to a pod on this worker node or back to eth0 and to another worker node that has other replicas of that pod to process the request.

For more information about how kube-proxy uses LVS and IPVS, see IPVS-Based In-Cluster Load Balancing Deep Dive.

Routing table

Calico is responsible for delivering IP packets to and from Kubernetes pods. When a pod sends an Address Resolution Protocol (ARP) request, Calico is always the next IP hop on the packet delivery journey. Calico applies the iptables rules to the request and then sends them to the other pod directly, kube-proxy, or sends them outside the Kubernetes cluster through the tenant network. The routing information is stored in a Linux routing table on the Calico worker node.

The following text is an example of a routing table on a worker node:

$ route

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc pfifo_fast state UP group default qlen 1000

link/ether fa:16:3e:bf:cb:fc brd ff:ff:ff:ff:ff:ff

inet 10.0.0.6/24 brd 10.0.0.255 scope global dynamic eth0

valid_lft 76190sec preferred_lft 76190sec

inet6 fe80::f816:3eff:febf:cbfc/64 scope link

valid_lft forever preferred_lft forever

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:48:7e:36:f2 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

inet6 fe80::42:48ff:fe7e:36f2/64 scope link

valid_lft forever preferred_lft forever

6: kube-ipvs0: <BROADCAST,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN group default

link/ether 76:42:b6:e4:24:cf brd ff:ff:ff:ff:ff:ff

inet 10.3.0.1/32 brd 10.3.0.1 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.0.3/32 brd 10.3.0.3 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.55.252/32 brd 10.3.55.252 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.248.85/32 brd 10.3.248.85 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 172.99.65.142/32 brd 172.99.65.142 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.125.227/32 brd 10.3.125.227 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.201.201/32 brd 10.3.201.201 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.92.5/32 brd 10.3.92.5 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.169.148/32 brd 10.3.169.148 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.131.23/32 brd 10.3.131.23 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.202.25/32 brd 10.3.202.25 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.232.245/32 brd 10.3.232.245 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.249.193/32 brd 10.3.249.193 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.198.106/32 brd 10.3.198.106 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.81.64/32 brd 10.3.81.64 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.66.106/32 brd 10.3.66.106 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.121.31/32 brd 10.3.121.31 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.13.82/32 brd 10.3.13.82 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.156.254/32 brd 10.3.156.254 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.179.85/32 brd 10.3.179.85 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.20.113/32 brd 10.3.20.113 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.94.143/32 brd 10.3.94.143 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.188.170/32 brd 10.3.188.170 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.10.65/32 brd 10.3.10.65 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.171.165/32 brd 10.3.171.165 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.141.154/32 brd 10.3.141.154 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.159.18/32 brd 10.3.159.18 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.35.42/32 brd 10.3.35.42 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.248.76/32 brd 10.3.248.76 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.133.73/32 brd 10.3.133.73 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.65.201/32 brd 10.3.65.201 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.118.116/32 brd 10.3.118.116 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.82.120/32 brd 10.3.82.120 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.223.10/32 brd 10.3.223.10 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet 10.3.52.96/32 brd 10.3.52.96 scope global kube-ipvs0

valid_lft forever preferred_lft forever

inet6 fe80::7442:b6ff:fee4:24cf/64 scope link

valid_lft forever preferred_lft forever

9: caliac52d399c5c@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

10: cali94f5a40346e@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 1

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

11: calif9c60118c9d@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 2

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

12: cali12440b20ff9@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 3

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

13: cali646ffb1b67d@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 4

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

14: cali315a9d1eed9@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 5

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

15: cali3913b4bb0e1@if3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 6

inet6 fe80::ecee:eeff:feee:eeee/64 scope link

valid_lft forever preferred_lft forever

All internal tenant network traffic is served inside of the 10.0.0.0 network (kube-proxy) through the kube-ipvs0 interface. kube-proxy creates IPVSs for each Service IP address respectively.

In the example above, Calico uses the 169.254.169.254 gateway to apply its iptables rules.

The following text is an example of a routing table on a pod:

/ # route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default 169.254.1.1 0.0.0.0 UG 0 0 0 eth0

169.254.1.1 * 255.255.255.255 UH 0 0 0 eth0

In the example above,169.254.1.1 is the Calico gateway.

Analyze IP packets using tcpdump

You can use the tcpdump tool to analyze IP packets transmitted to and from pods on a Kubernetes node.

To use tcpdump, complete the following steps:

- If you want to generate network traffic for testing purposes, install the

net-toolsprobe on your node:

apiVersion: apps/v1

kind: Deployment

metadata:x

name: net-tools-deployment

labels:

app: net-tools

spec:

replicas: 1

selector:

matchLabels:

app: net-tools

template:

metadata:

labels:

app: net-tools

spec:

containers:

- name: nettools

image: raesene/alpine-nettools

- Determine the worker node on which the pod that you want to monitor is running.

- Log in to the pod:

Example:

(kubernetes-xgerman/rackspace-system) installer $ kubectl -it exec prometheus-k8s-1 /bin/sh

Example of system response:

Defaulting container name to prometheus.

Use 'kubectl describe pod/prometheus-k8s-1' to see all of the containers in this pod.

In the example above, we log in to a Prometheus pod.

- List all IP addresses on the pod’s network interface:

/prometheus $ ip a

Example of system response:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop qlen 1000

link/ipip 0.0.0.0 brd 0.0.0.0

4: eth0@if15: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1400 qdisc noqueue

link/ether 22:77:54:21:7c:0f brd ff:ff:ff:ff:ff:ff

inet 10.2.5.10/32 scope global eth0

valid_lft forever preferred_lft forever

- Remember the network address for

eth0. - Ping . he pod from the probe.

Example:

5676-c4h6z /bin/sh

/ # ping 10.2.5.10

Example of system response:

PING 10.2.5.10 (10.2.5.10): 56 data bytes

64 bytes from 10.2.5.10: seq=0 ttl=62 time=1.725 ms

64 bytes from 10.2.5.10: seq=1 ttl=62 time=1.250 ms

64 bytes from 10.2.5.10: seq=2 ttl=62 time=1.122 ms

64 bytes from 10.2.5.10: seq=3 ttl=62 time=1.369 ms

64 bytes from 10.2.5.10: seq=4 ttl=62 time=0.771 ms

64 bytes from 10.2.5.10: seq=5 ttl=62 time=0.725 ms

- Log in to the worker node.

- Validate that traffic gets to the node:

sudo tcpdump -i eth0 icmp

Example of system response:

dropped privs to tcpdump

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on flannel.1, link-type EN10MB (Ethernet), capture size 262144 bytes

21:35:34.662425 IP 10.2.3.15 > 10.2.5.10: ICMP echo request, id 11520, seq 60, length 64

21:35:34.662483 IP 10.2.5.10 > 10.2.3.15: ICMP echo reply, id 11520, seq 60, length 64

21:35:35.662860 IP 10.2.3.15 > 10.2.5.10: ICMP echo request, id 11520, seq 61, length 64

21:35:35.663682 IP 10.2.5.10 > 10.2.3.15: ICMP echo reply, id 11520, seq 61, length 64

21:35:36.663004 IP 10.2.3.15 > 10.2.5.10: ICMP echo request, id 11520, seq 62, length 64

21:35:36.663086 IP 10.2.5.10 > 10.2.3.15: ICMP echo reply, id 11520, seq 62, length 64

21:35:37.663531 IP 10.2.3.15 > 10.2.5.10: ICMP echo request, id 11520, seq 63, length 64

21:35:37.663596 IP 10.2.5.10 > 10.2.3.15: ICMP echo reply, id 11520, seq 63, length 64

21:35:38.663694 IP 10.2.3.15 > 10.2.5.10: ICMP echo request, id 11520, seq 64, length 64

21:35:38.663784 IP 10.2.5.10 > 10.2.3.15: ICMP echo reply, id 11520, seq 64, length 64

21:35:39.663464 IP 10.2.3.15 > 10.2.5.10: ICMP echo request, id 11520, seq 65, length 64

21:35:39.663556 IP 10.2.5.10 > 10.2.3.15: ICMP echo reply, id 11520, seq 65, length 64

21:35:40.664055 IP 10.2.3.15 > 10.2.5.10: ICMP echo request, id 11520, seq 66, length 64

21:35:40.664141 IP 10.2.5.10 > 10.2.3.15: ICMP echo reply, id 11520, seq 66, length 64

- Get the name of the pod (

caliNNN) network interface by its IP address:

Example:

route | grep 10.2.5.10

Example of system response:

10.2.5.10 0.0.0.0 255.255.255.255 UH 0 0 0 calie2afdf225c0

-

Validate the traffic on the pod’s (

caliNNN) network interface:Example:

sudo tcpdump -i calie2afdf225c0 icmp

Example of system response:

dropped privs to tcpdump

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on calie2afdf225c0, link-type EN10MB (Ethernet), capture size 262144 bytes

21:37:36.693484 IP 10.2.3.15 > 10.2.5.10: ICMP echo request, id 11520, seq 182, length 64

21:37:36.693544 IP 10.2.5.10 > 10.2.3.15: ICMP echo reply, id 11520, seq 182, length 64

21:37:37.693764 IP 10.2.3.15 > 10.2.5.10: ICMP echo request, id 11520, seq 183, length 64

21:37:37.693802 IP 10.2.5.10 > 10.2.3.15: ICMP echo reply, id 11520, seq 183, length 64

21:37:38.693562 IP 10.2.3.15 > 10.2.5.10: ICMP echo request, id 11520, seq 184, length 64

21:37:38.693601 IP 10.2.5.10 > 10.2.3.15: ICMP echo reply, id 11520, seq 184, length 64

21:37:39.693902 IP 10.2.3.15 > 10.2.5.10: ICMP echo request, id 11520, seq 185, length 64

21:37:39.693943 IP 10.2.5.10 > 10.2.3.15: ICMP echo reply, id 11520, seq 185, length 64

Add resource limits to applications

Resource limits are a critical part of a production deployment. Without defined limits, Kubernetes overschedules workloads, leading to “noisy neighbor” problems. Such behavior is particularly troublesome for workloads that come in bursts, which might expand to use all of the available resources on a Kubernetes worker node.

Adding resource limits

Kubernetes has the following classes of resource constraints: limit and request. The request attribute is a soft limit, which might be exceeded if extra resources are available. The limit attribute is a hard limit, which prevents scheduling if worker nodes do not have enough resources available. The limit resource might cause termination of an application if the application attempts to exceed the configured cap.

These limits are specified in the container specification.

Example:

apiVersion: v1

kind: Pod

metadata:

name: frontend

spec:

containers:

- name: db

image: mysql

resources:

requests:

memory: "64Mi"

cpu: "250m"

limits:

memory: "128Mi"

cpu: "500m"

Determining resource limits

Profiling actual usage is the best way to set resource limits. Deploy the application without setting any resource limits, run it through a typical set of tasks, and then examine the resource usage in the monitoring dashboard. You might want to set limits somewhat higher than the observed usage to ensure optimal provisioning. Limits can later be tuned downward if necessary.

Troubleshooting

This section describes how to troubleshoot issues with your Kubernetes cluster, managed services, and underlying components of the Rackspace KaaS solution.

- Basic troubleshooting

- Troubleshooting Rackspace KaaS

- Troubleshooting etcd

- Troubleshooting octavia

- Troubleshooting Kubespray

Basic troubleshooting

Maintainers

- Shane Cunningham (@shanec)

To get help from the maintainers, contact them in #kaas by their Slack name.

Kubernetes

Connect to a Kubernetes node

You can access the Kubernetes master nodes by using floating IPs (FIPs).

- To discover the FIPs for the Kubernetes master nodes, set the OpenStack tooling aliases.

- List your OpenStack servers:

$ openstack server list -c Name -c Status -c Networks

+--------------------------+--------+--------------------------------------------------+

| Name | Status | Networks |

+--------------------------+--------+--------------------------------------------------+

| etoews-rpc1-iad-master-0 | ACTIVE | etoews-rpc1-iad_network=10.0.0.14, 172.99.77.130 |

| etoews-rpc1-iad-worker-0 | ACTIVE | etoews-rpc1-iad_network=10.0.0.6 |

| etoews-rpc1-iad-worker-1 | ACTIVE | etoews-rpc1-iad_network=10.0.0.9 |

| etoews-rpc1-iad-master-2 | ACTIVE | etoews-rpc1-iad_network=10.0.0.7, 172.99.77.107 |

| etoews-rpc1-iad-master-1 | ACTIVE | etoews-rpc1-iad_network=10.0.0.12, 172.99.77.100 |

| etoews-rpc1-iad-worker-2 | ACTIVE | etoews-rpc1-iad_network=10.0.0.10 |

+--------------------------+--------+--------------------------------------------------+

- Export the

SSH_OPTSandMASTER_FIPvariables:

$ export SSH_OPTS="-i clusters/${K8S_CLUSTER_NAME}/id_rsa_core -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no"

$ export MASTER_FIP=172.99.77.130

- Connect to the Kubernetes master node:

$ ssh ${SSH_OPTS} core@${MASTER_FIP}

- Get the

kubeletlogs:

core@clustername-master-0 ~ $ journalctl -u kubelet

By default, Kubernetes worker nodes are not publicly available as they do not have an assigned floating IP address (FIP). To connect to a Kubernetes worker node, first copy the SSH private key located in clusters/*clustername*/id_rsa on the machine from which you deployed the Kubernetes cluster (your laptop or an OpenStack infrastructure node) to a Kubernetes master node and all worker nodes. Then, you can connect to a Kubernetes worker node from the Kubernetes master node.

$ scp ${SSH_OPTS} clusters/${K8S_CLUSTER_NAME}/id_rsa_core core@${MASTER_FIP}:.

$ ssh ${SSH_OPTS} core@${MASTER_FIP}

core@clustername-master-0 ~ $ ssh -i id_rsa_core core@<worker-ip>

core@clustername-worker-0 ~ $ journalctl -u kubelet

Diagnose a Kubernetes cluster

You can use the cluster-diagnostics.sh script to get the information about a Kubernetes cluster.

hack/support/cluster-diagnostics.sh

To simplify log gathering, we recommend that you install this gist CLI.

To use the gist CLI, run the following commands:

gist --login

gist --private --copy clusters/${K8S_CLUSTER_NAME}/logs/*.log

The command above uploads the logs to a private. gist and copies the gist URL to your clipboard.

If the Kubernetes API is reachable, the script uses heptio/sonobuoy to gather diagnostics about the cluster. The collected data is accumulated in the clusters/$K8S_CLUSTER_NAME/logs/sonobuoy-diagnostics.$(date +%Y-%m-%dT%H:%M:%S).tar.gz archive. For more information about the contents of the tarball, see the Sonobuoy documentation.

The script uploads the cluster-diagnostics, sonobuoy-diagnostics, and kubernetes-installer logs and tarballs to Cloud Files. However, for this to work you need to configure the K8S_CF_USER and K8S_CF_APIKEY variables from PasswordSafe. You might also need to pull the latest OpenStack CLI client image.

Known Issues

Warning, FailedMount, attachdetach-controller, AttachVolume.Attach, Invalid request due to incorrect syntax or missing required parameters.

An issue with attachdetach-controller might result in the following error message:

kubectl get events --all-namespaces

NAMESPACE LAST SEEN FIRST SEEN COUNT NAME KIND SUBOBJECT TYPE REASON SOURCE MESSAGE

monitoring 32m 1d 72 prometheus-customer-0.15333bb2a31a0e4f Pod Warning FailedMount attachdetach-controller AttachVolume.Attach failed for volume "pvc-c29aceb0-6385-11e8-abfd-fa163eea581f" : Invalid request due to incorrect syntax or missing required parameters.

If you see a message like the one described above in the event log while the volume is bound to a node and mounted to a pod or container, then your Kubernetes cluster might have other issues unrelated to the volume. For more information, see K8S-1105.

Managed services

Image registry

The image registry is implemented using VMware Harbor. For more information, see the VMware Harbor documentation.

Harbor is a subsystem made of highly-coupled microservices. We recommend that you read Harbor architecture overview to understand the product better.

Harbor’s architecture has evolved since the documentation was written and it is still in transition. VMware is adding and changing services constantly and it might take some time until the final architecture is implemented. You might want to read the updated architecture overview PR that can help you understand some of the newer components and all of the coupling.

See also:

- Walkthrough of Harbor running on Managed Kubernetes in Harbor Registry Walkabout.

- The script associated with Harbor Registry Walkabout.

Image registry database

To access the image registry database, run the following commands:

$ REGISTRY_MYSQL_POD_ID=$(kubectl get pods -n rackspace-system | grep registry-mysql | awk '{print $1}')

$ kubectl exec ${REGISTRY_MYSQL_POD_ID} -it -n rackspace-system -- bash

# mysql --user=root --password=$MYSQL_ROOT_PASSWORD --database=registry

mysql> show tables;

mysql> select * from alembic_version;

Elasticsearch

Index pruning

To debug the index pruning, find the latest job run by using the following commands:

$ kubectl get jobs | grep curator

es-purge-1497635820 1 0 3m

$ job=curator-1497635820

$ pods=$(kubectl get pods --selector=job-name=${job} --output=jsonpath={.items..metadata.name})

$ kubectl logs $pods

OpenStack

Connect to an OpenStack environment data plane

Complete the following steps:

-

Connect to the VPN.

-

Choose the OpenStack env from one of the

<env>.<region>.ohthree.comtabs in the Managed Kubernetes PasswordSafe and find the 10.x.x.x IP and root password.- If you need one of the other physical hosts, try searching for the inventory file for your environment and host. If you cannot find it, ask one of the maintainers.

-

Connect to the

control-1node of the OpenStack control plane:

ssh [email protected]

- View each of the control plane services by typing the following commands:

lxc-ls

- Connect to the required service by SSH using the service name. For example:

ssh control-1_cinder_api_container-972603b0

- View the service logs in

/var/log/<servicename>/. For example:

ls /var/log/cinder/

OpenStack tooling

Set up the aliases that can run the OpenStack client to help you troubleshoot your RPC environment using the following commands:

alias osc='docker run -it --rm --volume ${PWD}:/data --env-file <(env | grep OS_) --env PYTHONWARNINGS="ignore:Unverified HTTPS request" quay.io/rackspace/openstack-cli'

alias openstack='osc openstack --insecure'

alias neutron='osc neutron --insecure'

You can use the following aliases:

openstack server list

neutron net-list

OpenStack monitoring

To view the monitoring information for a particular RPCO environment, follow these steps:

- Log in to https://monitoring.rackspace.net using your Rackspace SSO.

- Search for the account environment ID. For example:

rpc1.kubernetes.iad.ohthree.com: 4958307 - Click on Rackspace Intelligence in the navigation bar to access the Cloud Monitoring. You can view checks without leaving to Rackspace Intelligence.

Delete OpenStack resources

Ocasionally, when you delete a Kubernetes cluster, it might result in orphaned resources. To remove these resources, use the following options:

- Delete. Use this command, if you cannot connect to the kubernetes API server.

kaasctl cluster delete <cluster-name>

- OpenStack Dashboard. You can try to delete Kubernetes resources using the Horizon UI. However, its functionality might be limited.

Map OpenStack servers to physical hosts

- Log in to the RPC environment using the admin credentials from one of the

*.kubernetes.ohthree.comtabs in the Managed Kubernetes PasswordSafe. - In the left hand navigation menu, select Admin > Instances.

- In the upper-right corner, filter the instances using

Name =from the dropdown. The physical host is listed in the Host column.

Out of Memory, Kubenetes node is in NotReady status

If you found a node that is in the NotReady status, you can analyze the log file of this machine for potential problems by performing these steps:

- Log in to the OpenStack Horizon UI.

- Go to Project -> Compute -> Instances.

- Select the instance name -> Log -> View full log.

- If you see Memory cgroup out of memory in the log file, this might be the reason of the node in

NotReadystatus. Reboot the node.

- If you see Memory cgroup out of memory in the log file, this might be the reason of the node in

RPC dashboards

The following dashboards deployed in rpc1.kubernetes.iad.ohthree.com might help you troubleshoot issues with your Kubernetes cluster:

-

Elasticsearch, Logstash, and Kibana (ELK):

-

Telegraf, InfluxDB, Grafana, and Kapacitor (TGIK):

Telegraf, InfluxDB, Grafana, and Kapacitor (TGIK) is a new addition to RPC Monitoring-as-a-Service (MaaS). TGIK is a time-series platform that collects metrics from the MaaS plugins and stores them in InfluxDB. Rackspace KaaS uses Grafana to display these time series metrics. In this release, the system, network, and summary dashboards are supported.

- Access: https://rpc1.kubernetes.iad.ohthree.com:8089

- Credentials: None. If you want your own user created contact one of the maintainers.

Troubleshooting Rackspace KaaS

Table of Contents

- DNS

- Logs

- Attach to a pod

- Checking the running configuration

- Namespaces

- Failure domains

- Troubleshooting unresponsive Kubernetes services

DNS

Rackspace Kubernetes-as-a-Service (KaaS) uses OpenStack DNS-as-a-Service (Designate) that provides REST APIs for record management, as well as integrates with the OpenStack Identity service (keystone). When Kubernetes Installer creates Kubernetes nodes, it adds DNS records about the nodes in the Designate DNS zone. Kubernetes Installer also adds records for Ingress Controller, Docker registry, and the Kubernetes API endpoints.

You can use the following commands to retrieve information about your Kubernetes cluster:

- Use the

hostlookup utility to check the IP address of your Kubernetes master node:

$ host shanec-cluster-master-0.pug.systems

shanec-cluster-master-0.pug.systems has address 148.62.13.55

- Connect to the Kubernetes master node using SSH:

$ ssh -i id_rsa_core [email protected]

Last login: Fri Jun 23 16:49:09 UTC 2017 from 172.99.99.10 on pts/0

Container Linux by CoreOS beta (1409.1.0)

Update Strategy: No Reboots

core@shanec-cluster-master-0 ~ $

Each cluster has its own SSH key credentials in PasswordSafe. The default user in Container Linux is core.

You can apply this operation to all Kubernetes master nodes in the cluster:

$ kubectl get nodes

NAME STATUS AGE VERSION

shanec-cluster-master-0 Ready 31m v1.6.4+coreos.0

shanec-cluster-master-1 Ready 31m v1.6.4+coreos.0

shanec-cluster-master-2 Ready 31m v1.6.4+coreos.0

shanec-cluster-worker-0 Ready 31m v1.6.4+coreos.0

shanec-cluster-worker-1 Ready 31m v1.6.4+coreos.0

shanec-cluster-worker-2 Ready 31m v1.6.4+coreos.0

Logs

The Rackspace KaaS offering deploys Elasticsearch, Fluentd, and Kibana by default. Also, when troubleshooting pods, you can use the kubectl logs command to inspect pod level logs.

Access Kibana information

Kibana visualizes information about your Kubernetes clusters that is collected and stored in Elasticsearch.

You can use the following commands to retrieve information about your Kubernetes cluster provided by Kibana:

- Access information about Kibana deployments in all namespaces:

$ kubectl get all --all-namespaces -l k8s-app=kibana

NAMESPACE NAME READY STATUS RESTARTS AGE

rackspace-system pod/kibana-76c4c44bcb-nmspf 1/1 Running 0 15h

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rackspace-system service/logs ClusterIP 10.3.160.207 <none> 5601/TCP 15h

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

rackspace-system deployment.extensions/kibana 1 1 1 1 15h

NAMESPACE NAME DESIRED CURRENT READY AGE

rackspace-system replicaset.extensions/kibana-76c4c44bcb 1 1 1 15h

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

rackspace-system deployment.apps/kibana 1 1 1 1 15h

NAMESPACE NAME DESIRED CURRENT READY AGE

rackspace-system replicaset.apps/kibana-76c4c44bcb 1 1 1 15h

- By default, Kibana is configured with an ingress resource that enables inbound connections reach Kubernetes services. Each Kubernetes cluster has an ingress fully qualified domain name (FQDN) at

kibana.${K8S_CLUSTER_NAME}.pug.systems. You can view information about ingress resources by running the following command:

$ kubectl get ingress --all-namespaces

NAMESPACE NAME HOSTS ADDRESS PORTS AGE

monitoring prometheus * 80 15h

rackspace-system grafana * 80 15h

rackspace-system kibana * 80 15h

rackspace-system prometheus * 80 15h

View information about the Kibana ingress resource by running the following command:

$ kubectl describe ingress -n rackspace-system kibana

System response:

Name: kibana

Namespace: rackspace-system

Address:

Default backend: default-http-backend:80 (<none>)

Rules:

Host Path Backends

---- ---- --------

*

/logs logs:5601 (<none>)

Annotations:

nginx.ingress.kubernetes.io/auth-type: basic

nginx.ingress.kubernetes.io/rewrite-target: /

nginx.ingress.kubernetes.io/ssl-redirect: false

kubectl.kubernetes.io/last-applied-configuration: {"apiVersion":"extensions/v1beta1","kind":"Ingress","metadata":{"annotations":{"nginx.ingress.kubernetes.io/auth-realm":"Kibana Basic Authentication","nginx.ingress.kubernetes.io/auth-secret":"basic-auth-access","nginx.ingress.kubernetes.io/auth-type":"basic","nginx.ingress.kubernetes.io/rewrite-target":"/","nginx.ingress.kubernetes.io/ssl-redirect":"false"},"labels":{"k8s-app":"kibana"},"name":"kibana","namespace":"rackspace-system"},"spec":{"rules":[{"http":{"paths":[{"backend":{"serviceName":"logs","servicePort":5601},"path":"/logs"}]}}]}}

nginx.ingress.kubernetes.io/auth-realm: Kibana Basic Authentication

nginx.ingress.kubernetes.io/auth-secret: basic-auth-access

Events: <none>

- Access the Kibana web user interface (WUI) at

https://kibana.${DOMAIN}. Use the credentials stored in PasswordSafe.

Analyze pod logs

Sometimes issues occur with specific pods. For example, a pod might be in a restart loop because the health check is failing. You can check pod logs in Kibana or you can check pod logs from the Kubernetes cluster directly.

To analyze pod issues, use the following instructions:

- Get information about pods:

kubectl get pods

For example, you have the following pod with errors:

rackspace-system po/elasticsearch-3003189550-9k8nz 0/1 Error 26 4h

- Search the log file for the error information about this pod:

$ kubectl logs po/elasticsearch-3003189550-9k8nz -n rackspace-system

System response:

[2017-06-21T23:35:15,680][INFO ][o.e.n.Node ] [] initializing ...

[2017-06-21T23:35:15,826][INFO ][o.e.e.NodeEnvironment ] [f_DBfoM] using [1] data paths, mounts [[/ (overlay)]], net usable_space [86.3gb], net total_space [94.5gb], spins? [unknown], types [overlay]

[2017-06-21T23:35:15,827][INFO ][o.e.e.NodeEnvironment ] [f_DBfoM] heap size [371.2mb], compressed ordinary object pointers [true]

[2017-06-21T23:35:15,830][INFO ][o.e.n.Node ] node name [f_DBfoM] derived from node ID [f_DBfoMATZS89ap-852SWQ]; set [node.name] to override

[2017-06-21T23:35:15,830][INFO ][o.e.n.Node ] version[5.3.0], pid[1], build[3adb13b/2017-03-23T03:31:50.652Z], OS[Linux/4.11.6-coreos/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/1.8.0_92-internal/25.92-b14]

[2017-06-21T23:35:19,067][INFO ][o.e.p.PluginsService ] [f_DBfoM] loaded module [aggs-matrix-stats]

[2017-06-21T23:35:19,067][INFO ][o.e.p.PluginsService ] [f_DBfoM] loaded module [ingest-common]

[2017-06-21T23:35:19,068][INFO ][o.e.p.PluginsService ] [f_DBfoM] loaded module [lang-expression]

[2017-06-21T23:35:19,068][INFO ][o.e.p.PluginsService ] [f_DBfoM] loaded module [lang-groovy]

[2017-06-21T23:35:19,068][INFO ][o.e.p.PluginsService ] [f_DBfoM] loaded module [lang-mustache]

[2017-06-21T23:35:19,068][INFO ][o.e.p.PluginsService ] [f_DBfoM] loaded module [lang-painless]

[2017-06-21T23:35:19,069][INFO ][o.e.p.PluginsService ] [f_DBfoM] loaded module [percolator]

[2017-06-21T23:35:19,069][INFO ][o.e.p.PluginsService ] [f_DBfoM] loaded module [reindex]

[2017-06-21T23:35:19,069][INFO ][o.e.p.PluginsService ] [f_DBfoM] loaded module [transport-netty3]

[2017-06-21T23:35:19,069][INFO ][o.e.p.PluginsService ] [f_DBfoM] loaded module [transport-netty4]

[2017-06-21T23:35:19,070][INFO ][o.e.p.PluginsService ] [f_DBfoM] loaded plugin [x-pack]

[2017-06-21T23:35:24,974][INFO ][o.e.n.Node ] initialized

[2017-06-21T23:35:24,975][INFO ][o.e.n.Node ] [f_DBfoM] starting ...

[2017-06-21T23:35:25,393][WARN ][i.n.u.i.MacAddressUtil ] Failed to find a usable hardware address from the network interfaces; using random bytes: 80:8d:a8:63:81:52:00:ce

[2017-06-21T23:35:25,518][INFO ][o.e.t.TransportService ] [f_DBfoM] publish_address {10.2.4.10:9300}, bound_addresses {[::]:9300}

[2017-06-21T23:35:25,531][INFO ][o.e.b.BootstrapChecks ] [f_DBfoM] bound or publishing to a non-loopback or non-link-local address, enforcing bootstrap checks

ERROR: bootstrap checks failed

max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

[2017-06-21T23:35:25,575][INFO ][o.e.n.Node ] [f_DBfoM] stopping ...

[2017-06-21T23:35:25,665][INFO ][o.e.n.Node ] [f_DBfoM] stopped

[2017-06-21T23:35:25,666][INFO ][o.e.n.Node ] [f_DBfoM] closing ...

[2017-06-21T23:35:25,691][INFO ][o.e.n.Node ] [f_DBfoM] closed

In the output above, the pod is failing because of the following error:

ERROR: bootstrap checks failed max virtual memory areas vm.max_map_count

[65530] is too low, increase to at least [262144].

When you see this error, you might need to investigate the deployment or pod YAML.

- View the Ingress Controller pod log tail:

$ kubectl logs nginx-ingress-controller-294216488-ncfq9 -n rackspace-system --tail=3

::ffff:172.99.99.10 - [::ffff:172.99.99.10] - - [23/Jun/2017:18:08:18 +0000] "POST /logs/api/monitoring/v1/clusters HTTP/2.0" 200 974 "https://148.62.13.65/logs/app/monitoring" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.12; rv:45.0) Gecko/20100101 Firefox/45.0" 117 0.124 [kube-system-logs-5601] 10.2.0.3:5601 751 0.124 200"

::ffff:172.99.99.10 - [::ffff:172.99.99.10] - - [23/Jun/2017:18:08:33 +0000] "GET /logs/api/reporting/jobs/list_completed_since?since=2017-06-23T17:26:26.119Z HTTP/2.0" 200 262 "https://148.62.13.65/logs/app/monitoring" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.12; rv:45.0) Gecko/20100101 Firefox/45.0" 66 0.024 [kube-system-logs-5601] 10.2.0.3:5601 37 0.024 200"

::ffff:172.99.99.10 - [::ffff:172.99.99.10] - - [23/Jun/2017:18:08:33 +0000] "POST /logs/api/monitoring/v1/clusters HTTP/2.0" 200 974 "https://148.62.13.65/logs/app/monitoring" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.12; rv:45.0) Gecko/20100101 Firefox/45.0" 117 0.160 [kube-system-logs-5601] 10.2.0.3:5601 751 0.160 200"

- To get detailed output, run the

kubectl logscommand in the verbose mode:

$ kubectl logs nginx-ingress-controller-294216488-ncfq9 -n rackspace-system --tail=3 --v=99

System response

I0623 13:10:51.867425 35338 loader.go:354] Config loaded from file /Users/shan5490/code/kubernetes/managed-kubernetes/kubernetes-installer-myfork/clusters/shanec-cluster/generated/auth/kubeconfig

I0623 13:10:51.868737 35338 cached_discovery.go:118] returning cached discovery info from /Users/shan5490/.kube/cache/discovery/shanec_cluster_k8s.pug.systems_443/servergroups.json

I0623 13:10:51.874469 35338 cached_discovery.go:118] returning cached discovery info from /Users/shan5490/.kube/cache/discovery/shanec_cluster_k8s.pug.systems_443/servergroups.json

...

I0623 13:10:51.874602 35338 round_trippers.go:398] curl -k -v -XGET -H "Accept: application/json, */*" -H "User-Agent: kubectl/v1.6.6 (darwin/amd64) kubernetes/7fa1c17" https://shanec-cluster-k8s.pug.systems:443/api/v1/namespaces/kube-system/pods/nginx-ingress-controller-294216488-ncfq9

I0623 13:10:52.044683 35338 round_trippers.go:417] GET https://shanec-cluster-k8s.pug.systems:443/api/v1/namespaces/kube-system/pods/nginx-ingress-controller-294216488-ncfq9 200 OK in 170 milliseconds

I0623 13:10:52.044731 35338 round_trippers.go:423] Response Headers:

I0623 13:10:52.044744 35338 round_trippers.go:426] Content-Type: application/json

I0623 13:10:52.044751 35338 round_trippers.go:426] Content-Length: 3396

I0623 13:10:52.044757 35338 round_trippers.go:426] Date: Fri, 23 Jun 2017 18:10:52 GMT

I0623 13:10:52.045303 35338 request.go:991] Response Body: {"kind":"Pod","apiVersion":"v1","metadata":{"name":"nginx-ingress-controller-294216488-ncfq9","generateName":"nginx-ingress-controller-294216488-","namespace":"kube-system","selfLink":"/api/v1/namespaces/kube-system/pods/nginx-ingress-controller-294216488-ncfq9","uid":"49f7b2f2-5833-11e7-b991-fa163e0178f4","resourceVersion":"1584","creationTimestamp":"2017-06-23T16:44:56Z","labels":{"k8s-app":"nginx-ingress-controller","pod-template-hash":"294216488"},"annotations":{"kubernetes.io/created-by":"{\"kind\":\"SerializedReference\",\"apiVersion\":\"v1\",\"reference\":{\"kind\":\"ReplicaSet\",\"namespace\":\"kube-system\",\"name\":\"nginx-ingress-controller-294216488\",\"uid\":\"49ed0172-5833-11e7-b991-fa163e0178f4\",\"apiVersion\":\"extensions\",\"resourceVersion\":\"1383\"}}\n"},"ownerReferences":[{"apiVersion":"extensions/v1beta1","kind":"ReplicaSet","name":"nginx-ingress-controller-294216488","uid":"49ed0172-5833-11e7-b991-fa163e0178f4","controller":true,"blockOwnerDeletion":true}]},"spec":{"volumes":[{"name":"default-token-b6f5v","secret":{"secretName":"default-token-b6f5v","defaultMode":420}}],"containers":[{"name":"nginx-ingress-controller","image":"gcr.io/google_containers/nginx-ingress-controller:0.9.0-beta.3","args":["/nginx-ingress-controller","--default-backend-service=$(POD_NAMESPACE)/default-http-backend"],"ports":[{"hostPort":80,"containerPort":80,"protocol":"TCP"},{"hostPort":443,"containerPort":443,"protocol":"TCP"}],"env":[{"name":"POD_NAME","valueFrom":{"fieldRef":{"apiVersion":"v1","fieldPath":"metadata.name"}}},{"name":"POD_NAMESPACE","valueFrom":{"fieldRef":{"apiVersion":"v1","fieldPath":"metadata.namespace"}}}],"resources":{},"volumeMounts":[{"name":"default-token-b6f5v","readOnly":true,"mountPath":"/var/run/secrets/kubernetes.io/serviceaccount"}],"livenessProbe":{"httpGet":{"path":"/healthz","port":10254,"scheme":"HTTP"},"initialDelaySeconds":10,"timeoutSeconds":1,"periodSeconds":10,"successThreshold":1,"failureThreshold":3},"readinessProbe":{"httpGet":{"path":"/healthz","port":10254,"scheme":"HTTP"},"timeoutSeconds":1,"periodSeconds":10,"successThreshold":1,"failureThreshold":3},"terminationMessagePath":"/dev/termination-log","terminationMessagePolicy":"File","imagePullPolicy":"IfNotPresent"}],"restartPolicy":"Always","terminationGracePeriodSeconds":60,"dnsPolicy":"ClusterFirst","serviceAccountName":"default","serviceAccount":"default","nodeName":"shanec-cluster-worker-1","hostNetwork":true,"securityContext":{},"schedulerName":"default-scheduler"},"status":{"phase":"Running","conditions":[{"type":"Initialized","status":"True","lastProbeTime":null,"lastTransitionTime":"2017-06-23T16:44:56Z"},{"type":"Ready","status":"True","lastProbeTime":null,"lastTransitionTime":"2017-06-23T16:45:26Z"},{"type":"PodScheduled","status":"True","lastProbeTime":null,"lastTransitionTime":"2017-06-23T16:44:56Z"}],"hostIP":"148.62.13.65","podIP":"148.62.13.65","startTime":"2017-06-23T16:44:56Z","containerStatuses":[{"name":"nginx-ingress-controller","state":{"running":{"startedAt":"2017-06-23T16:45:12Z"}},"lastState":{},"ready":true,"restartCount":0,"image":"gcr.io/google_containers/nginx-ingress-controller:0.9.0-beta.3","imageID":"docker-pullable://gcr.io/google_containers/nginx-ingress-controller@sha256:995427304f514ac1b70b2c74ee3c6d4d4ea687fb2dc63a1816be15e41cf0e063","containerID":"docker://2b93a3253696a1498dbe718b0eeb553fde2335f14a81e30837a6fe057d457264"}],"qosClass":"BestEffort"}}

I0623 13:10:52.047274 35338 round_trippers.go:398] curl -k -v -XGET -H "Accept: application/json, */*" -H "User-Agent: kubectl/v1.6.6 (darwin/amd64) kubernetes/7fa1c17" https://shanec-cluster-k8s.pug.systems:443/api/v1/namespaces/kube-system/pods/nginx-ingress-controller-294216488-ncfq9/log?tailLines=3

I0623 13:10:52.082334 35338 round_trippers.go:417] GET https://shanec-cluster-k8s.pug.systems:443/api/v1/namespaces/kube-system/pods/nginx-ingress-controller-294216488-ncfq9/log?tailLines=3 200 OK in 35 milliseconds

I0623 13:10:52.082358 35338 round_trippers.go:423] Response Headers:

I0623 13:10:52.082364 35338 round_trippers.go:426] Content-Type: text/plain

I0623 13:10:52.082368 35338 round_trippers.go:426] Content-Length: 1057

I0623 13:10:52.082372 35338 round_trippers.go:426] Date: Fri, 23 Jun 2017 18:10:52 GMT

::ffff:172.99.99.10 - [::ffff:172.99.99.10] - - [23/Jun/2017:18:10:37 +0000] "GET /logs/api/reporting/jobs/list_completed_since?since=2017-06-23T17:26:26.119Z HTTP/2.0" 200 262 "https://148.62.13.65/logs/app/monitoring" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.12; rv:45.0) Gecko/20100101 Firefox/45.0" 66 0.014 [kube-system-logs-5601] 10.2.0.3:5601 37 0.014 200"

::ffff:172.99.99.10 - [::ffff:172.99.99.10] - - [23/Jun/2017:18:10:46 +0000] "POST /logs/api/monitoring/v1/clusters HTTP/2.0" 200 973 "https://148.62.13.65/logs/app/monitoring" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.12; rv:45.0) Gecko/20100101 Firefox/45.0" 117 0.177 [kube-system-logs-5601] 10.2.0.3:5601 750 0.177 200"

::ffff:172.99.99.10 - [::ffff:172.99.99.10] - - [23/Jun/2017:18:10:47 +0000] "GET /logs/api/reporting/jobs/list_completed_since?since=2017-06-23T17:26:26.119Z HTTP/2.0" 200 262 "https://148.62.13.65/logs/app/monitoring" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.12; rv:45.0) Gecko/20100101 Firefox/45.0" 66 0.013 [kube-system-logs-5601] 10.2.0.3:5601 37 0.013 200"

- If the pod has been restarted previously, check the previous container’s logs with the

--previousflag.

$ kubectl describe po/etcd-operator-4083686351-rh6x7 -n kube-system | grep -A6 'State'

State: Running

Started: Fri, 23 Jun 2017 11:45:06 -0500

Last State: Terminated

Reason: Error

Exit Code: 1

Started: Mon, 01 Jan 0001 00:00:00 +0000

Finished: Fri, 23 Jun 2017 11:45:05 -0500

Ready: True

Restart Count: 1

$ kubectl logs --previous po/etcd-operator-4083686351-rh6x7 -n kube-system

time="2017-06-23T16:42:48Z" level=info msg="etcd-operator Version: 0.3.0"

time="2017-06-23T16:42:48Z" level=info msg="Git SHA: d976dc4"

time="2017-06-23T16:42:48Z" level=info msg="Go Version: go1.8.1"

time="2017-06-23T16:42:48Z" level=info msg="Go OS/Arch: linux/amd64"

time="2017-06-23T16:43:12Z" level=info msg="starts running from watch version: 0" pkg=controller

time="2017-06-23T16:43:12Z" level=info msg="start watching at 0" pkg=controller

time="2017-06-23T16:43:19Z" level=info msg="creating cluster with Spec (spec.ClusterSpec{Size:1, Version:\"3.1.6\", Paused:false, Pod:(*spec.PodPolicy)(0xc420240e40), Backup:(*spec.BackupPolicy)(nil), Restore:(*spec.RestorePolicy)(nil), SelfHosted:(*spec.SelfHostedPolicy)(0xc420116f70), TLS:(*spec.TLSPolicy)(nil)}), Status (spec.ClusterStatus{Phase:\"Creating\", Reason:\"\", ControlPaused:false, Conditions:[]spec.ClusterCondition(nil), Size:0, Members:spec.MembersStatus{Ready:[]string(nil), Unready:[]string(nil)}, CurrentVersion:\"\", TargetVersion:\"\", BackupServiceStatus:(*spec.BackupServiceStatus)(nil)})" cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:43:19Z" level=info msg="migrating boot member (http://10.3.0.200:12379)" cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:43:28Z" level=info msg="self-hosted cluster created with boot member (http://10.3.0.200:12379)" cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:43:28Z" level=info msg="wait 1m0s before removing the boot member" cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:43:28Z" level=info msg="start running..." cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:43:36Z" level=error msg="failed to update members: skipping update members for self hosted cluster: waiting for the boot member (boot-etcd) to be removed..." cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:43:44Z" level=error msg="failed to update members: skipping update members for self hosted cluster: waiting for the boot member (boot-etcd) to be removed..." cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:43:52Z" level=error msg="failed to update members: skipping update members for self hosted cluster: waiting for the boot member (boot-etcd) to be removed..." cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:44:00Z" level=error msg="failed to update members: skipping update members for self hosted cluster: waiting for the boot member (boot-etcd) to be removed..." cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:44:08Z" level=error msg="failed to update members: skipping update members for self hosted cluster: waiting for the boot member (boot-etcd) to be removed..." cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:44:16Z" level=error msg="failed to update members: skipping update members for self hosted cluster: waiting for the boot member (boot-etcd) to be removed..." cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:44:24Z" level=error msg="failed to update members: skipping update members for self hosted cluster: waiting for the boot member (boot-etcd) to be removed..." cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:44:35Z" level=info msg="Start reconciling" cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:44:35Z" level=info msg="Finish reconciling" cluster-name=kube-etcd pkg=cluster

E0623 16:44:36.200993 1 election.go:259] Failed to update lock: etcdserver: request timed out, possibly due to previous leader failure

time="2017-06-23T16:44:43Z" level=info msg="Start reconciling" cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:44:43Z" level=info msg="Finish reconciling" cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:44:51Z" level=info msg="Start reconciling" cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:44:51Z" level=info msg="Finish reconciling" cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:44:59Z" level=info msg="Start reconciling" cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:44:59Z" level=info msg="Finish reconciling" cluster-name=kube-etcd pkg=cluster

time="2017-06-23T16:45:05Z" level=error msg="received invalid event from API server: fail to decode raw event from apiserver (unexpected EOF)" pkg=controller

time="2017-06-23T16:45:05Z" level=fatal msg="controller Run() ended with failure: fail to decode raw event from apiserver (unexpected EOF)"

Attach to a pod

You can attach to a pod for troubleshooting purposes. However, do not make changes or fix issues through attaching. If a pod is not working as intended, investigate the image, YAML configuration file, or system needs.

- Run

/bin/bashfrom the NGINX pod:

$ kubectl -n rackspace-system exec -it nginx-ingress-controller-294216488-ncfq9 -- /bin/bash

- If

bashfails, trysh:

$ kubectl -n rackspace-system exec -it nginx-ingress-controller-294216488-ncfq9 -- sh`

Now you can perform a few simple operations wit. the pod.

- View the list of directories and files:

root@shanec-cluster-worker-1:/# ls -la /

total 26936

drwxr-xr-x. 1 root root 4096 Jun 23 16:45 .

drwxr-xr-x. 1 root root 4096 Jun 23 16:45 ..

-rwxr-xr-x. 1 root root 0 Jun 23 16:45 .dockerenv

-rw-r-----. 2 root root 1194 Feb 22 17:39 Dockerfile

drwxr-xr-x. 2 root root 4096 Jun 23 16:45 bin

drwxr-xr-x. 2 root root 4096 Apr 12 2016 boot

drwxr-xr-x. 5 root root 380 Jun 23 16:45 dev

drwxr-xr-x. 1 root root 4096 Jun 23 16:45 etc

drwxr-xr-x. 2 root root 4096 Apr 12 2016 home

drwxr-x---. 1 root root 4096 Jun 23 16:45 ingress-controller

drwxr-xr-x. 5 root root 4096 Jun 23 16:45 lib

drwxr-xr-x. 2 root root 4096 Jun 23 16:45 lib64

drwxr-xr-x. 2 root root 4096 Jan 19 16:31 media

drwxr-xr-x. 2 root root 4096 Jan 19 16:31 mnt

-rwxr-x---. 2 root root 27410080 Mar 14 21:46 nginx-ingress-controller

drwxr-xr-x. 2 root root 4096 Jan 19 16:31 opt

dr-xr-xr-x. 126 root root 0 Jun 23 16:45 proc

drwx------. 1 root root 4096 Jun 24 23:30 root

drwxr-xr-x. 1 root root 4096 Jun 23 16:45 run

drwxr-xr-x. 2 root root 4096 Mar 14 21:46 sbin

drwxr-xr-x. 2 root root 4096 Jan 19 16:31 srv

dr-xr-xr-x. 13 root root 0 Jun 23 16:39 sys

drwxrwxrwt. 1 root root 4096 Jun 24 23:30 tmp

drwxr-xr-x. 10 root root 4096 Jun 23 16:45 usr

drwxr-xr-x. 1 root root 4096 Jun 23 16:45 var

- Display the information about disk space:

root@shanec-cluster-worker-1:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 95G 3.2G 88G 4% /

tmpfs 2.0G 0 2.0G 0% /dev

tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/vda9 95G 3.2G 88G 4% /etc/hosts

shm 64M 0 64M 0% /dev/shm

tmpfs 2.0G 12K 2.0G 1% /run/secrets/kubernetes.io/serviceaccount

- Display the information about the Linux distribution:

root@shanec-cluster-worker-1:/# cat /etc/lsb-release

DISTRIB_ID=Ubuntu

DISTRIB_RELEASE=16.04

DISTRIB_CODENAME=xenial

DISTRIB_DESCRIPTION="Ubuntu 16.04.2 LTS"

Run single commands

You can run single commands to perform operations with pods:

$ kubectl -n kube-system exec nginx-ingress-controller-294216488-ncfq9 ls /var/log/nginx

access.log

error.log

Check the running configuration

When troubleshooting a Kubernetes cluster, you might want to check the YAML configuration file that the deployment uses.

To check the deployment YAML file, run the following command:

$ kubectl get deploy/kibana -o yaml -n rackspace-system > deployment-kibana.yaml

System response:

$ cat deployment-kibana.yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

annotations:

deployment.kubernetes.io/revision: "1"

kubectl.kubernetes.io/last-applied-configuration: |

{"apiVersion":"extensions/v1beta1","kind":"Deployment","metadata":{"annotations":{},"labels":{"k8s-app":"kibana"},"name":"kibana","namespace":"kube-system"},"spec":{"replicas":1,"template":{"metadata":{"labels":{"k8s-app":"kibana"}},"spec":{"affinity":{"podAntiAffinity":{"requiredDuringSchedulingIgnoredDuringExecution":[{"labelSelector":{"matchExpressions":[{"key":"k8s-app","operator":"In","values":["kibana"]}]},"topologyKey":"kubernetes.io/hostname"}]}},"containers":[{"env":[{"name":"ELASTICSEARCH_URL","value":"http://elasticsearch-logging:9200"},{"name":"SERVER_BASEPATH","value":"/logs"}],"image":"docker.elastic.co/kibana/kibana:5.3.0","name":"kibana","ports":[{"containerPort":5601,"name":"ui","protocol":"TCP"}],"readinessProbe":{"httpGet":{"path":"/api/status","port":5601},"initialDelaySeconds":90,"periodSeconds":60},"resources":{"requests":{"cpu":"100m"}}}]}}}}

creationTimestamp: 2017-06-23T16:44:37Z

generation: 1

labels:

k8s-app: kibana

name: kibana

namespace: rackspace-system

resourceVersion: "2595"

selfLink: /apis/extensions/v1beta1/namespaces/kube-system/deployments/kibana

uid: 3ec4a8d0-5833-11e7-acfc-fa163e1f6ecf

spec:

replicas: 1

selector:

matchLabels:

k8s-app: kibana

strategy:

rollingUpdate:

maxSurge: 1

maxUnavailable: 1

type: RollingUpdate

template:

metadata:

creationTimestamp: null

labels:

k8s-app: kibana

spec:

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchExpressions:

- key: k8s-app

operator: In

values:

- kibana

topologyKey: kubernetes.io/hostname

containers:

- env:

- name: ELASTICSEARCH_URL

value: http://elasticsearch-logging:9200

- name: SERVER_BASEPATH

value: /logs

image: docker.elastic.co/kibana/kibana:5.3.0

imagePullPolicy: IfNotPresent

name: kibana

ports:

- containerPort: 5601

name: ui

protocol: TCP

readinessProbe:

failureThreshold: 3

httpGet:

path: /api/status

port: 5601

scheme: HTTP

initialDelaySeconds: 90

periodSeconds: 60

successThreshold: 1

timeoutSeconds: 1

resources:

requests:

cpu: 100m

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

restartPolicy: Always

schedulerName: default-scheduler

securityContext: {}

terminationGracePeriodSeconds: 30

status:

availableReplicas: 1

conditions:

- lastTransitionTime: 2017-06-23T16:44:56Z

lastUpdateTime: 2017-06-23T16:44:56Z

message: Deployment has minimum availability.

reason: MinimumReplicasAvailable

status: "True"

type: Available

observedGeneration: 1

readyReplicas: 1

replicas: 1

updatedReplicas: 1

Namespaces

Kubernetes uses namespaces to isolate Kubernetes resources from each other and manage access control. Namespaces are virtual clusters that provide scope for names and cluster resource management. When you run a command, you need to specify in which namespace a resource is located.

By default, Rackspace KaaS has the following Kubernetes namespaces:

- default

- kube-public

- kube-system

- monitoring

- rackspace-system

- tectonic-system

You can use the following commands to get information about Kubernetes namespaces:

- Get the list of namespaces:

$ kubectl get namespace

NAME STATUS AGE

default Active 15h

kube-public Active 15h

kube-system Active 15h

monitoring Active 15h

rackspace-system Active 15h

tectonic-system Active 15h

- Get the list of pods:

$ kubectl get pods

No resources found.

- Get the list of pods for the

kube-systemnamespace:

$ kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

default-http-backend-2198840601-phqnn 1/1 Running 0 1d

elasticsearch-3003189550-91mvh 1/1 Running 0 1d

elasticsearch-3003189550-l3gds 1/1 Running 0 1d

elasticsearch-3003189550-pz8zn 1/1 Running 0 1d

etcd-operator-4083686351-rh6x7 1/1 Running 1 1d

...

Failure domains

The following list describes significant failure domains and issues in a Kubernetes cluster deployment:

- etcd

- Susceptible to network latency

- Maintain 50%+1 availabity (will go read-only if fall below threshold)

- Controller manager and scheduler

- Controller manager fails: cloud provider integrations, such as neutron LBaaS stop working.

- Controller manager and scheduler fail: Deployments and so on cannot scale. Failed node workloads do not reschedule.

Troubleshooting unresponsive Kubernetes services

This section describes troubleshooting tasks for Rackapace KaaS managed services.

kube-scheduler

Kubernetes scheduler plays an important role in ensuring Kubernetes resource availability and performance. Verifying that Kubernetes scheduler is operational is one of the essential steps of cluster troubleshooting.

No scheduler pods available

When a Kubernetes service is unavailable, you can use kube-scheduler to troubleshoot the problem. To simulate a scenario where no scheduler pods are available, scale the deployment to zero replicas so that Kubernetes cannot schedule or reschedule any additional pods.

Note: You can use this method with other services, such as kube-controller-manager.

To troubleshoot an unavailable Kubernetes service, complete the following steps:

- List all

kube-schedulerresources in thekube-systemnamespace:

$ kubectl get all -l k8s-app=kube-scheduler -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-scheduler 3 3 3 3 2d

NAME DESIRED CURRENT READY AGE

rs/kube-scheduler-774c4578b7 3 3 3 2d

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-scheduler 3 3 3 3 2d

NAME DESIRED CURRENT READY AGE

rs/kube-scheduler-774c4578b7 3 3 3 2d

NAME READY STATUS RESTARTS AGE

po/kube-scheduler-774c4578b7-jrd9s 1/1 Running 0 2d

po/kube-scheduler-774c4578b7-jtqcl 1/1 Running 0 2d

po/kube-scheduler-774c4578b7-nprqb 1/1 Running 0 2d

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-scheduler-prometheus-discovery ClusterIP None <none> 10251/TCP 2d

- Scale

kube-schedulerto zero replicas:

$ kubectl scale deploy kube-scheduler --replicas 0 -n kube-system

deployment "kube-scheduler" scaled

- List all resources for

kube-scheduler:

$ kubectl get all -l k8s-app=kube-scheduler -n kube-system

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-scheduler 0 0 0 0 2d

NAME DESIRED CURRENT READY AGE

rs/kube-scheduler-774c4578b7 0 0 0 2d

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deploy/kube-scheduler 0 0 0 0 2d

NAME DESIRED CURRENT READY AGE

rs/kube-scheduler-774c4578b7 0 0 0 2d

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kube-scheduler-prometheus-discovery ClusterIP None <none> 10251/TCP 2d

Kubernetes scheduler is now unavailable. To fix this you can act as a scheduler.

- Save the

kube-schedulerconfiguration in a*.yamlfile:

kubectl get deploy kube-scheduler -n kube-system -o yaml > scheduler.yaml

- View the

scheduler.yamlfile:

$ cat scheduler.yaml

apiVersion: extensions/v1beta1

kind: Deployment

metadata:

annotations:

deployment.kubernetes.io/revision: "1"

creationTimestamp: 2017-10-04T18:52:20Z

generation: 2

labels:

k8s-app: kube-scheduler

tectonic-operators.coreos.com/managed-by: kube-version-operator

tier: control-plane

name: kube-scheduler

namespace: kube-system

resourceVersion: "413557"

selfLink: /apis/extensions/v1beta1/namespaces/kube-system/deployments/kube-scheduler

uid: 26cb400d-a935-11e7-9e40-fa163ece5424

spec:

replicas: 0

selector:

matchLabels:

k8s-app: kube-scheduler

tier: control-plane

strategy:

rollingUpdate:

maxSurge: 1

maxUnavailable: 0

type: RollingUpdate

template:

metadata:

annotations:

scheduler.alpha.kubernetes.io/critical-pod: ""

creationTimestamp: null

labels:

k8s-app: kube-scheduler

pod-anti-affinity: kube-scheduler-1.7.5-tectonic.1

tectonic-operators.coreos.com/managed-by: kube-version-operator

tier: control-plane

spec:

affinity:

podAntiAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

- labelSelector:

matchLabels:

pod-anti-affinity: kube-scheduler-1.7.5-tectonic.1

namespaces:

- kube-system

topologyKey: kubernetes.io/hostname

containers:

- command:

- ./hyperkube

- scheduler

- --leader-elect=true

image: quay.io/coreos/hyperkube:v1.8.0_coreos.0

imagePullPolicy: IfNotPresent

livenessProbe:

failureThreshold: 3

httpGet:

path: /healthz

port: 10251

scheme: HTTP

initialDelaySeconds: 15

periodSeconds: 10

successThreshold: 1

timeoutSeconds: 15

name: kube-scheduler

resources: {}

terminationMessagePath: /dev/termination-log

terminationMessagePolicy: File

dnsPolicy: ClusterFirst

nodeSelector:

node-role.kubernetes.io/master: ""

restartPolicy: Always

schedulerName: default-scheduler

securityContext:

runAsNonRoot: true

runAsUser: 65534

terminationGracePeriodSeconds: 30

tolerations:

- key: CriticalAddonsOnly

operator: Exists

- effect: NoSchedule

key: node-role.kubernetes.io/master

operator: Exists

status:

conditions:

- lastTransitionTime: 2017-10-04T18:53:34Z

lastUpdateTime: 2017-10-04T18:53:34Z

message: Deployment has minimum availability.

reason: MinimumReplicasAvailable

status: "True"

type: Available

observedGeneration: 2

- Change the kind of resource from

DeploymenttoPod, addnodeNamewhich must be one of the Kubernetes master nodes, and remove all the unnecessary parameters:

$ cat scheduler.yaml

apiVersion: v1

kind: Pod

metadata:

labels:

k8s-app: kube-scheduler

tectonic-operators.coreos.com/managed-by: kube-version-operator

tier: control-plane

name: kube-scheduler

namespace: kube-system

uid: 26cb400d-a935-11e7-9e40-fa163ece5424

spec:

nodeName: test-cluster-master-0

containers:

- command:

- ./hyperkube

- scheduler

- --leader-elect=true

image: quay.io/coreos/hyperkube:v1.8.0_coreos.0